Linear Regression Equation

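- Rewritten for a simple linear regression model (one feature), the equation is:

$$y = \beta_0 + \beta_1 x + \epsilon$$

- Here $\beta_0$ is the intercept, $\beta_1$ is the slope, and $\epsilon$ is the error term.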

Loss Function

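- The goal of linear regression is to find the values of the intercept $\beta_0$ and the slope $\beta_1$ that make the predictions $\hat{y}$ as close as possible to the observed values $y$.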

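- We quantify this using a loss function called Mean Squared Error (MSE), calculated as:

$$\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

- Here $y_i$ is the observed value, $\hat{y}_i$ is the predicted value, and $n$ is the number of observations.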

- Every model has a loss function; to "fit" a model is to minimize that loss function.
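To make the loss concrete, here is a minimal sketch that computes MSE by hand with NumPy. The toy data and the two candidate lines are made up for illustration; the helper name `mean_squared_error` is our own and not taken from the slides.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between observed and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

# Toy data (illustrative only): generated exactly by y = 1 + 2x
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([3.0, 5.0, 7.0, 9.0])

# Candidate line A: intercept 1, slope 2 (the line that generated the data)
mse_good = mean_squared_error(y, 1.0 + 2.0 * x)

# Candidate line B: intercept 0, slope 1 (a worse guess)
mse_bad = mean_squared_error(y, 0.0 + 1.0 * x)

print(f"MSE for line A: {mse_good}")
print(f"MSE for line B: {mse_bad}")
```

Fitting the model amounts to searching for the intercept and slope that give the smallest such value.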

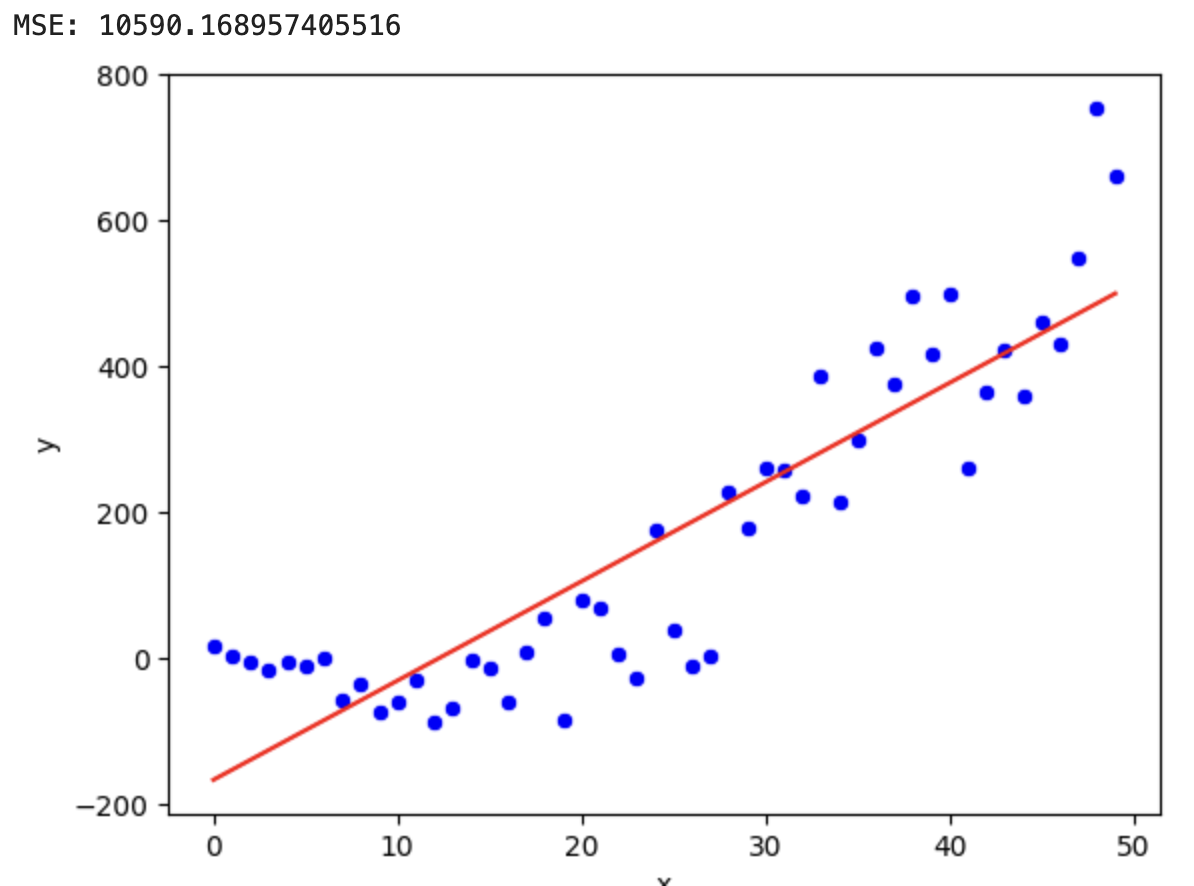

Visualizing Linear Regression

- The line of best fit minimizes the vertical distances (errors) between the observed points and the predicted line.

- The sum of these squared distances is what we aim to minimize using the loss function.

Why MSE Instead of Mean Absolute Error (MAE)?

- MSE penalizes larger errors more than MAE.

- Squaring the errors emphasizes larger discrepancies, making the model more sensitive to outliers.

- MSE is differentiable everywhere, whereas MAE is not differentiable where the error is exactly zero; this makes MSE easier to optimize using gradient-based methods.
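A small numeric sketch of the first two points, using made-up error values: replacing one error of -2 with an outlier of -10 inflates MSE far more than MAE. The helper names `mse` and `mae` are our own.

```python
import numpy as np

def mse(errors):
    """Mean of squared errors."""
    return float(np.mean(errors ** 2))

def mae(errors):
    """Mean of absolute errors."""
    return float(np.mean(np.abs(errors)))

# Illustrative residuals: the second set swaps one error for an outlier
errors_clean = np.array([1.0, -1.0, 2.0, -2.0])
errors_outlier = np.array([1.0, -1.0, 2.0, -10.0])

mse_clean, mse_out = mse(errors_clean), mse(errors_outlier)
mae_clean, mae_out = mae(errors_clean), mae(errors_outlier)

# How much each metric grows when the outlier is introduced
mse_growth = mse_out / mse_clean
mae_growth = mae_out / mae_clean

print(f"MSE: {mse_clean} -> {mse_out} (x{mse_growth:.2f})")
print(f"MAE: {mae_clean} -> {mae_out} (x{mae_growth:.2f})")
```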

Fitting a Linear Regression

Normal Equation

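- For small to medium-sized datasets, linear regression can be solved using simple matrix math:

$$\hat{\beta} = (X^\top X)^{-1} X^\top y$$

- Here $X$ is the feature matrix with a leading column of ones (for the intercept) and $y$ is the vector of observed values.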

- For larger datasets (and for other algorithms we'll go over later), Gradient Descent is used.

- For this class, we'll just use scikit-learn (`sklearn`).
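As a rough sketch, the matrix solution can be checked against scikit-learn's `LinearRegression`. The synthetic data, the seed, and the "true" coefficients below are assumptions chosen for illustration. The code solves the linear system with `np.linalg.solve` rather than forming an explicit inverse, which gives the same answer and is usually more stable numerically.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data (illustrative): y is roughly 1.5 + 2x plus noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 1.5 + 2.0 * x + rng.normal(0, 1, size=50)

# Closed-form solution: prepend a column of ones so the first
# coefficient acts as the intercept, then solve (X^T X) beta = X^T y
X = np.column_stack([np.ones_like(x), x])
beta = np.linalg.solve(X.T @ X, X.T @ y)

# The same fit with scikit-learn (expects a 2D feature array)
model = LinearRegression().fit(x.reshape(-1, 1), y)

print("closed form  :", beta)
print("scikit-learn :", model.intercept_, model.coef_[0])
```

Both approaches should agree up to floating-point error.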

Multiple Linear Regression

- For multiple linear regression, the model includes multiple independent variables:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p + \epsilon$$
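A minimal sketch of a multiple linear regression fit with scikit-learn, assuming three synthetic features and made-up coefficients. The target is generated without noise so that the fitted values can be compared directly with the ones used to build the data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data (illustrative): 200 rows, 3 independent variables
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 3))

# Made-up "true" parameters; no noise is added
true_intercept = 4.0
true_coefs = np.array([2.0, -1.0, 0.5])
y = true_intercept + X @ true_coefs

model = LinearRegression().fit(X, y)

# One coefficient per independent variable, plus a single intercept
print("intercept   :", model.intercept_)
print("coefficients:", model.coef_)
```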

Exercise

Linear Regressions Revisited

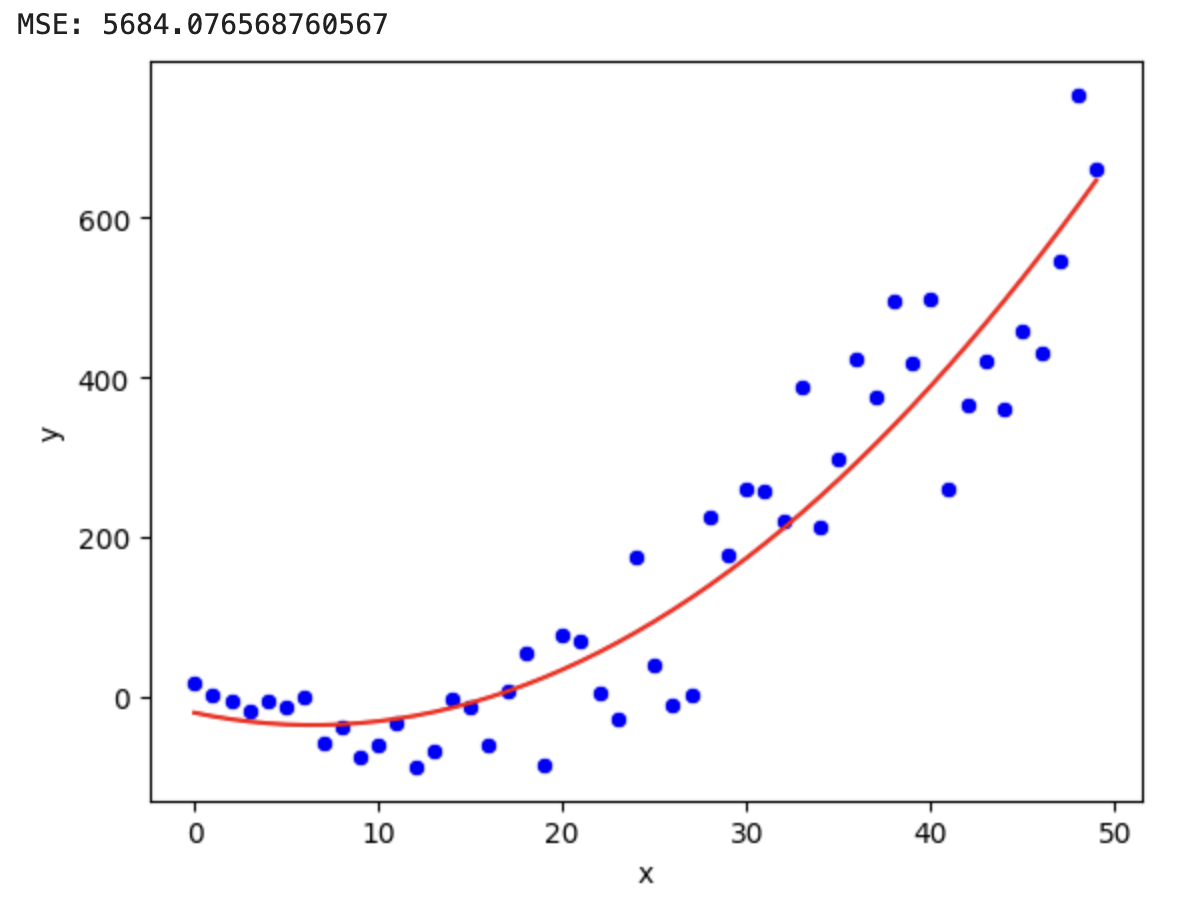

What is Polynomial Regression?

- Extension of linear regression to capture non-linear relationships.

- Fits a polynomial equation to the data

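Polynomial Regression Equation

- For a single feature $x$ and a polynomial of degree $d$:

$$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \dots + \beta_d x^d + \epsilon$$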

Feature Transformation

- Transform the original features into polynomial features.

- Example:

- Original feature: $x$

- Polynomial features: $x, x^2, \dots, x^d$
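One way to perform this transformation is scikit-learn's `PolynomialFeatures`. The sketch below assumes degree 3 and two made-up input values; `include_bias=False` drops the constant column, since the regression model adds its own intercept.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Original feature: a single column (values are illustrative)
x = np.array([[2.0], [3.0]])

# Expand each value x into the columns x, x^2, x^3
poly = PolynomialFeatures(degree=3, include_bias=False)
x_poly = poly.fit_transform(x)

print(x_poly)
```

Each row now holds the original value followed by its square and cube, so a linear model fitted on these columns is a cubic in the original feature.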

Model Training

- Similar to linear regression but with polynomial terms

- Minimize the sum of squared errors (SSE)

- Example:
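A minimal sketch of such an example, assuming scikit-learn and noise-free data generated from a made-up quadratic. A pipeline chains the feature transformation with an ordinary `LinearRegression`, which minimizes the sum of squared errors on the expanded features.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Illustrative data: exactly y = 1 + 2x + 0.5x^2, no noise
x = np.linspace(-3, 3, 30).reshape(-1, 1)
y = 1.0 + 2.0 * x.ravel() + 0.5 * x.ravel() ** 2

# Polynomial regression = polynomial features + linear regression
model = make_pipeline(
    PolynomialFeatures(degree=2, include_bias=False),
    LinearRegression(),
)
model.fit(x, y)

# make_pipeline names each step after its lowercased class name
lin = model.named_steps["linearregression"]

# Sum of squared errors on the training data
sse = float(np.sum((y - model.predict(x)) ** 2))

print("intercept   :", lin.intercept_)
print("coefficients:", lin.coef_)
print("SSE         :", sse)
```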

Overfitting and Underfitting

- Underfitting: Model is too simple, misses the pattern

- Good Fit: Model captures the underlying pattern without noise

- Overfitting: Model is too complex, captures noise
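The three cases can be explored by fitting polynomials of different degrees and comparing error on training data with error on held-out data. This is a sketch under stated assumptions: the noisy sine data, the seed, and the degrees 1, 4, and 15 are arbitrary choices for illustration, not values from the slides.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples from a sine curve (illustrative ground truth)."""
    x = rng.uniform(0, 1, size=n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, 0.2, size=n)
    return x.reshape(-1, 1), y

X_train, y_train = make_data(30)
X_test, y_test = make_data(200)

results = {}
for degree in (1, 4, 15):
    model = make_pipeline(
        PolynomialFeatures(degree=degree, include_bias=False),
        LinearRegression(),
    )
    model.fit(X_train, y_train)
    train_mse = mean_squared_error(y_train, model.predict(X_train))
    test_mse = mean_squared_error(y_test, model.predict(X_test))
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree:2d}: train MSE {train_mse:.3f}, test MSE {test_mse:.3f}")
```

A straight line (degree 1) typically shows high error on both sets, which is the signature of underfitting. A very high degree tends to push training error down while test error stops improving or gets worse, which is the signature of overfitting.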

[Figure panels: Underfit, Good Fit, Overfit]